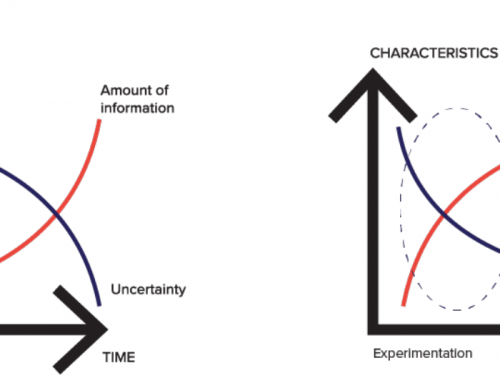

Data is vast and inherently hard to understand. Naturally, technologies, algorithms, and practices have been developed to improve how we understand, and ultimately use, data. The current trend holds that all data is inherently valuable, but this depends very much on what is considered to be of value. Algorithms built from selected data points, or features, are often used to predict the future, and time series analysis is one such approach. It can be considered one of the most important means of processing data, especially where historic data exists, or where the expected output requires actions to be understood over periods of time.

Time series analysis is a method of analysing data that maps out an action, usually in a place, across a stretch of time. The period typically spans a week, a month, or a year, depending on the value expected from the analysis or the limitations of the system from which the data is collected. For example, data can be analysed on an annual cycle when attempting to understand the seasonal trends of a product or holiday-based activities. The period can, however, also be defined by system processing and storage needs, such as the weekly batch drops of most telecommunications data, or the monthly cycles in banking.
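To make the choice of period concrete, here is a minimal sketch that counts the same dated observations per week, per month, or per year. The function name `bucket_counts`, the `granularity` parameter, and the sample purchase dates are all assumptions made for illustration; they do not come from any particular system.

```python
from collections import Counter
from datetime import date


def bucket_counts(dates, granularity):
    """Count observations per period; granularity is 'week', 'month' or 'year'."""
    counts = Counter()
    for d in dates:
        if granularity == "week":
            iso = d.isocalendar()
            key = (iso[0], iso[1])  # (ISO year, ISO week number)
        elif granularity == "month":
            key = (d.year, d.month)
        elif granularity == "year":
            key = (d.year,)
        else:
            raise ValueError(f"unsupported granularity: {granularity!r}")
        counts[key] += 1
    return dict(sorted(counts.items()))


# Hypothetical purchase dates, invented for this example.
purchase_dates = [
    date(2023, 1, 2), date(2023, 1, 4), date(2023, 1, 9),
    date(2023, 2, 14), date(2023, 12, 22), date(2023, 12, 24),
]

weekly = bucket_counts(purchase_dates, "week")
monthly = bucket_counts(purchase_dates, "month")
yearly = bucket_counts(purchase_dates, "year")
```

The same six observations look quite different at each granularity, which is why the period should follow from the question being asked, or from how often the source system delivers data.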

It is important to note that time series analysis only works when three main factors are present in the data: Time, Place and Act. The Time factor can take a few different forms. It could be just a marker indicating the year in which a data point was generated. It could be more detailed, with months, hours and seconds. Or it could be a more abstract representation specific to a region or business, such as seasons or terms. The Place factor also varies with the nature of the sector, where the data comes from, and the prediction requirements. Place could indicate one or more specific locations, whether physical or virtual. It could also indicate a person, in order to understand the Time, Place, Act relationship of a customer for a given business. Act is the indication of an action in a dataset, and it is the focus of time series analysis. The act could be any number of things, such as a customer purchase, an interaction in an app or website, the creation of an account, or a product activation. Understanding the frequency and location of the action is the primary purpose of time series analysis. Furthermore, the Act factor must indicate an individualised action, otherwise the analysis is of no real value. For example, analysing the frequency of accepted cookies when your cookie banner obscures the webpage is superfluous, because visitors have to click through the banner and the count says little about individual behaviour. Time, Place, and Act are important because together they help to identify the fundamental changes in human behaviour over time, in various locations, with different types of actions.
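As a rough illustration of the three factors, the sketch below stores each observation as a record with a time, a place and an act, then counts how often one act occurs per place and month. The `Event` class, its field names, the `act_frequency` helper and the sample records are hypothetical and only meant to show the shape of the data.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Event:
    """One observation; the field names are illustrative assumptions."""
    time: datetime  # Time: when the action happened
    place: str      # Place: a physical or virtual location, or a customer ID
    act: str        # Act: the individual action being analysed


def act_frequency(events, act):
    """Count how often `act` occurs per (place, year-month) pair."""
    counts = Counter()
    for event in events:
        if event.act == act:
            counts[(event.place, event.time.strftime("%Y-%m"))] += 1
    return counts


# Hypothetical records, invented for this example.
events = [
    Event(datetime(2023, 11, 24, 9, 30), "store-a", "purchase"),
    Event(datetime(2023, 11, 24, 17, 5), "store-a", "purchase"),
    Event(datetime(2023, 11, 25, 12, 0), "web", "account_created"),
    Event(datetime(2023, 12, 2, 10, 15), "web", "purchase"),
]

purchases = act_frequency(events, "purchase")
```

If any one of the three fields is missing, the count per place and period cannot be built, which is the practical reason all three factors are needed.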

Time series data is invaluable in the initial analyses done by the data science team in a business. The initial analysis can establish a hypothetical baseline, which requires human eyes and intellect to understand. From there, models can be built from features in the data, allowing further analyses to be performed through machine learning. With the right models, artificial intelligence can automate the time series process in order to map out when actions are likely to occur more or less frequently in a given situation. This time-based data and the subsequent analysis allow for the prediction of acts performed in time, in specific locations, or both.

As noted above, time series analysis is a vital method for predicting future actions, trends, or changes. It can be used by a company to review a product a year after launch, or to predict how a product or service launch may go. This type of data analysis can also be used for competitor analysis, to determine whether a competitor’s product or service may interfere with or enhance a possible new release. There is an array of models used for time series analysis, all of which can be found in the ecosystem.Ai Workbench. Each has specific strengths depending on the requirements of the prediction and the data available. A Bayesian Time Varying Coefficient (BTVC) model can be used to understand model parameter differences in a generalised way, using statistical measures to balance out coefficients. Exponential Smoothing (ETS) is commonly used in time series where seasonality needs to be accounted for. ETS will adjust coefficient variances based on passing time, while other models like BTVC balance out the parameters. Another example is the Damped Local Trend (DLT) model which, similar to ETS, will smooth out time-based variances. However, its trend component is damped rather than linear, which makes it suited to understanding data trends with time variances that are not seasonal. These models are essential to any business or individual wishing to forecast their business future, and can be used for analysis in time-based predictions, provided the data available contains information about the Time, Place and Act of a transaction.
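To show what a damped trend means in practice, here is a minimal sketch of classical damped-trend exponential smoothing (Holt's method with a damping factor). It is not the DLT implementation in the ecosystem.Ai Workbench, which is not described here; it is a simpler textbook relative chosen for illustration. The function name, the parameter names `alpha`, `beta` and `phi`, and the sample series are all assumptions.

```python
def damped_trend_forecast(series, alpha, beta, phi, horizon):
    """Forecast `horizon` steps ahead with damped-trend exponential smoothing.

    alpha: smoothing weight for the level (0 to 1)
    beta:  smoothing weight for the trend (0 to 1)
    phi:   damping factor (0 to 1); phi = 1 gives an undamped linear trend
    """
    if len(series) < 2:
        raise ValueError("need at least two observations")

    # Simple initialisation: first value as level, first difference as trend.
    level = series[0]
    trend = series[1] - series[0]

    for y in series[1:]:
        prev_level = level
        level = alpha * y + (1 - alpha) * (prev_level + phi * trend)
        trend = beta * (level - prev_level) + (1 - beta) * phi * trend

    forecasts = []
    damp = 0.0
    for h in range(1, horizon + 1):
        damp += phi ** h  # phi + phi^2 + ... + phi^h
        forecasts.append(level + damp * trend)
    return forecasts


# Hypothetical monthly counts of an action, invented for this example.
monthly_counts = [10, 12, 14, 16, 18]

linear = damped_trend_forecast(monthly_counts, alpha=0.5, beta=0.3, phi=1.0, horizon=3)
damped = damped_trend_forecast(monthly_counts, alpha=0.5, beta=0.3, phi=0.8, horizon=3)
```

With `phi=1.0` the forecast keeps climbing by the same amount each step, while with `phi=0.8` each step adds less than the one before, so the projected curve flattens out instead of extrapolating the recent trend indefinitely.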

An overwhelming number of statements are made about the value of data, and there is an equally overwhelming number of ways it can be used, depending on the expected business outcome. The ways in which value can be extracted are determined entirely by the needs of the predictions. Time series analysis is a way to extract important features for predictions that rely on time-based variances, such as seasonal or web interaction trends. Time series algorithms come in many forms, from the statistical balancing of model parameters to the attribution of time-based variances that account for seasons. The data that is vital to performing time series analysis, or implementing time-based machine learning, is the Time, Place and Act factors. Without them, there is no way to identify the changes in behaviour that lead to accurate predictions.

- Jessica Nicole

- Daniel Nordfors